Full Stack Fest in Barcelona

One of the highlights of this summer was attending the Full Stack Fest in Barcelona. The Full Stack Fest is a language-agnostic conference that focuses on the future of the web. The conference was outstanding: I learned a lot, met some great people, and overall it had a positive impact on my personal development.

All talks were great sources of learning and inspiration, but there was one that was particularly inspiring for me. In this blog post, I want to share what I learned from this talk.

Avoiding Digital Bias by Adam L. Smith

The talk on avoiding digital bias given by Adam L. Smith was one of my favorite talks during the conference.

From Adam’s talk I learned that machine learning is a field of computer science that gives computer systems the ability to learn rather than be explicitly programmed. Developers working on machine learning algorithms analyze data, extract the features they want from that data, and train models to behave in a certain way.

The use of artificial intelligence and machine learning is impacting a broad range of fields. For example, machine learning is already helping to predict severe weather patterns, detect privacy breaches in healthcare, and change the way repairs are performed on critical infrastructure.

While the impact that machine learning algorithms can have on science and society is promising, Smith argued that careful thinking and planning are required to avoid inappropriate bias. As he pointed out, “Machine learning can’t be fair or just unless it is given fair and just data.”

Machine learning is a useful tool for counting, sorting, grouping, and extracting certain types of patterns. However, moral values and the ability to transfer high-level knowledge from one domain to another are things that current algorithms are not equipped to handle well, although research is being conducted to address this.

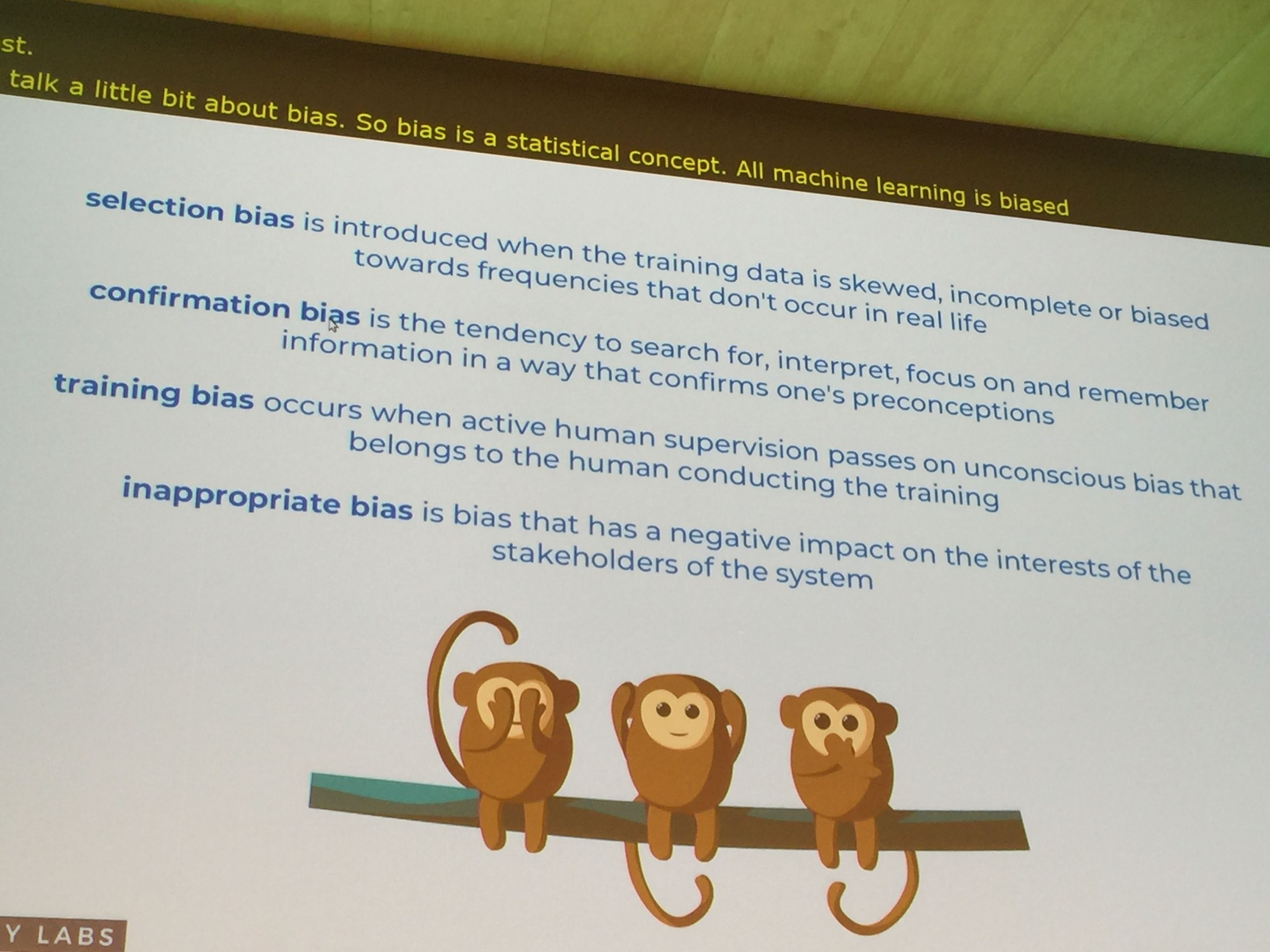

One of Smith’s main points was that some bias is natural, but when personal data such as gender, age, race, socio-economic background, or political preference is processed by machine learning algorithms, there is a significant and unique risk of unfair bias.

Smith argued that great care is needed to eliminate unwanted bias. During his presentation, he discussed selection bias, confirmation bias, training bias, and inappropriate bias, and presented eye-opening real-world examples of how these types of bias appeared in various projects.

Real world examples of bias

An example presented by Smith highlighted findings from researchers at Carnegie Mellon University. Automated testing and analysis of the Google advertising system revealed that male job seekers were shown more ads for higher-paying executive jobs. One reason for this was that women clicked less on ads for higher-paying jobs, so the model had trained itself not to display these types of advertisements to women. This type of bias is problematic because encouraging male candidates to apply for higher-paying jobs reinforces a bias that already exists offline.
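To make the self-reinforcing effect more concrete, here is a minimal toy simulation in Python. It is only a sketch under strong assumptions of my own: the function name `simulate_feedback_loop`, the rule that each group's share of ad impressions is proportional to the clicks it produced in the previous round, and the click rates are all made up for illustration. It does not describe how Google's advertising system actually works.

```python
def simulate_feedback_loop(click_rates, rounds=5):
    """Toy model of an ad system that learns from its own clicks.

    click_rates maps a group name to a hypothetical probability that
    someone in that group clicks the ad. Every round, the share of
    impressions each group receives is set in proportion to the
    expected clicks that group produced in the previous round.
    Returns the list of impression shares, one dict per round.
    """
    groups = list(click_rates)
    # Start by showing the ad equally to every group.
    share = {group: 1 / len(groups) for group in groups}
    history = [dict(share)]

    for _ in range(rounds):
        expected_clicks = {g: share[g] * click_rates[g] for g in groups}
        total = sum(expected_clicks.values())
        if total == 0:
            break  # nobody clicks, so there is nothing to learn from
        share = {g: expected_clicks[g] / total for g in groups}
        history.append(dict(share))

    return history


if __name__ == "__main__":
    # Made-up click rates: a small initial difference between groups.
    for round_number, shares in enumerate(
        simulate_feedback_loop({"men": 0.05, "women": 0.04})
    ):
        print(round_number, {g: round(s, 3) for g, s in shares.items()})
```

Even with a small difference in click rates, the share of impressions shown to the group that clicks less shrinks every round in this toy model, which is the kind of loop the example describes.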

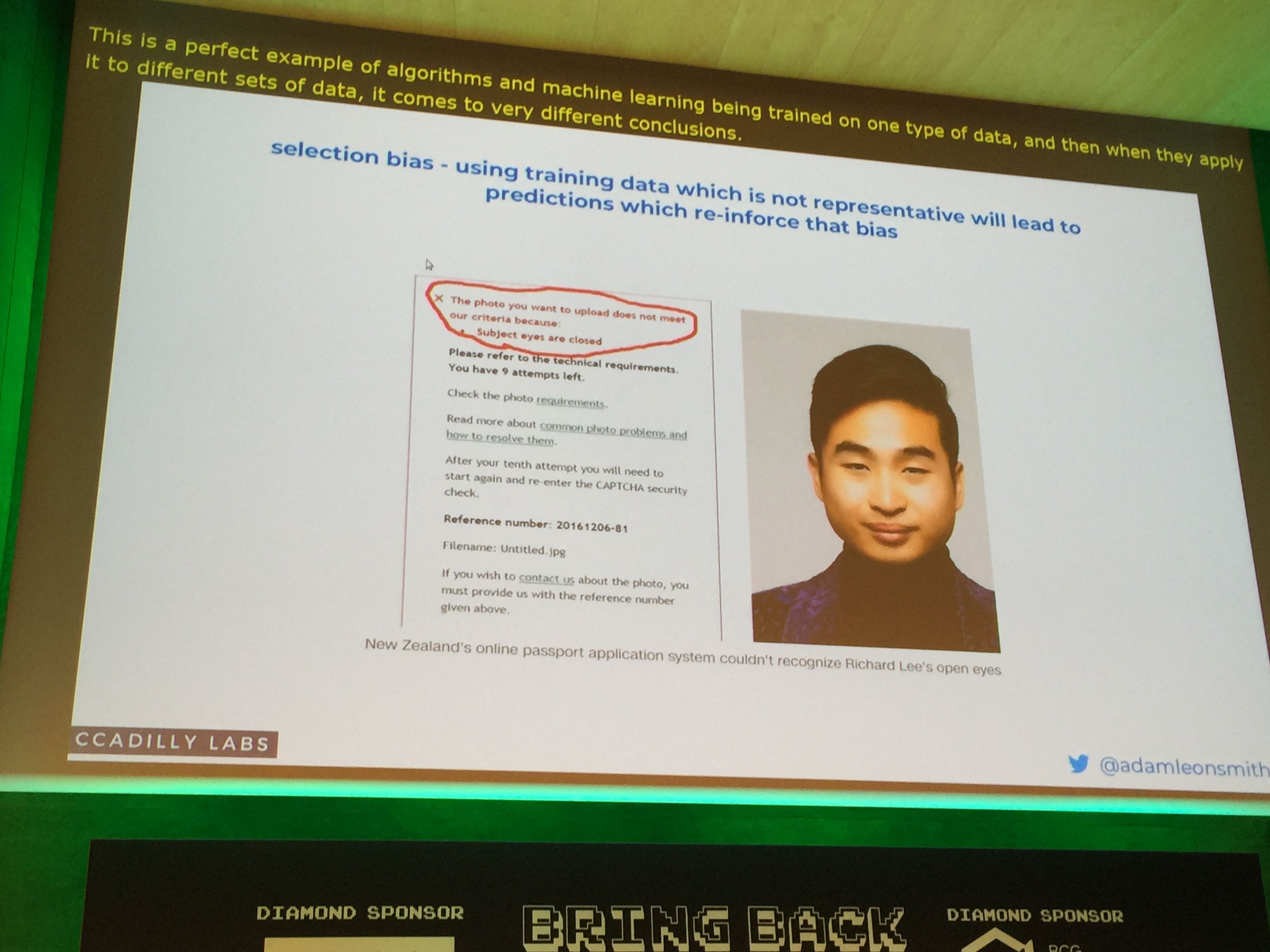

Smith also presented an example of bias in the facial recognition algorithms used by the New Zealand Department of Internal Affairs to validate passport photos. A man of Asian descent had his passport photo rejected because the facial recognition software erroneously identified his eyes as closed, even though they were clearly open. This example highlights how issues can arise when models are trained on biased data. Currently, most facial recognition models are trained on facial data heavily skewed toward the US and Europe.
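One simple way to notice this kind of skew before training is to look at how each group is represented in the data. The following Python sketch is my own illustration, not something shown in the talk: the function names, the region labels, the counts, and the 10% threshold are all hypothetical.

```python
from collections import Counter


def representation_shares(labels):
    """Return the fraction of samples that belongs to each group."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share):
    """List the groups whose share of the data is below min_share."""
    shares = representation_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


if __name__ == "__main__":
    # Made-up region labels for a hypothetical set of 100 training photos.
    regions = ["US"] * 60 + ["Europe"] * 30 + ["Asia"] * 7 + ["Africa"] * 3
    print(representation_shares(regions))
    print(underrepresented(regions, min_share=0.10))
```

A check like this does not remove bias on its own, but it makes a heavily skewed dataset visible early, when it is still cheap to collect more varied data.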

Possible solutions

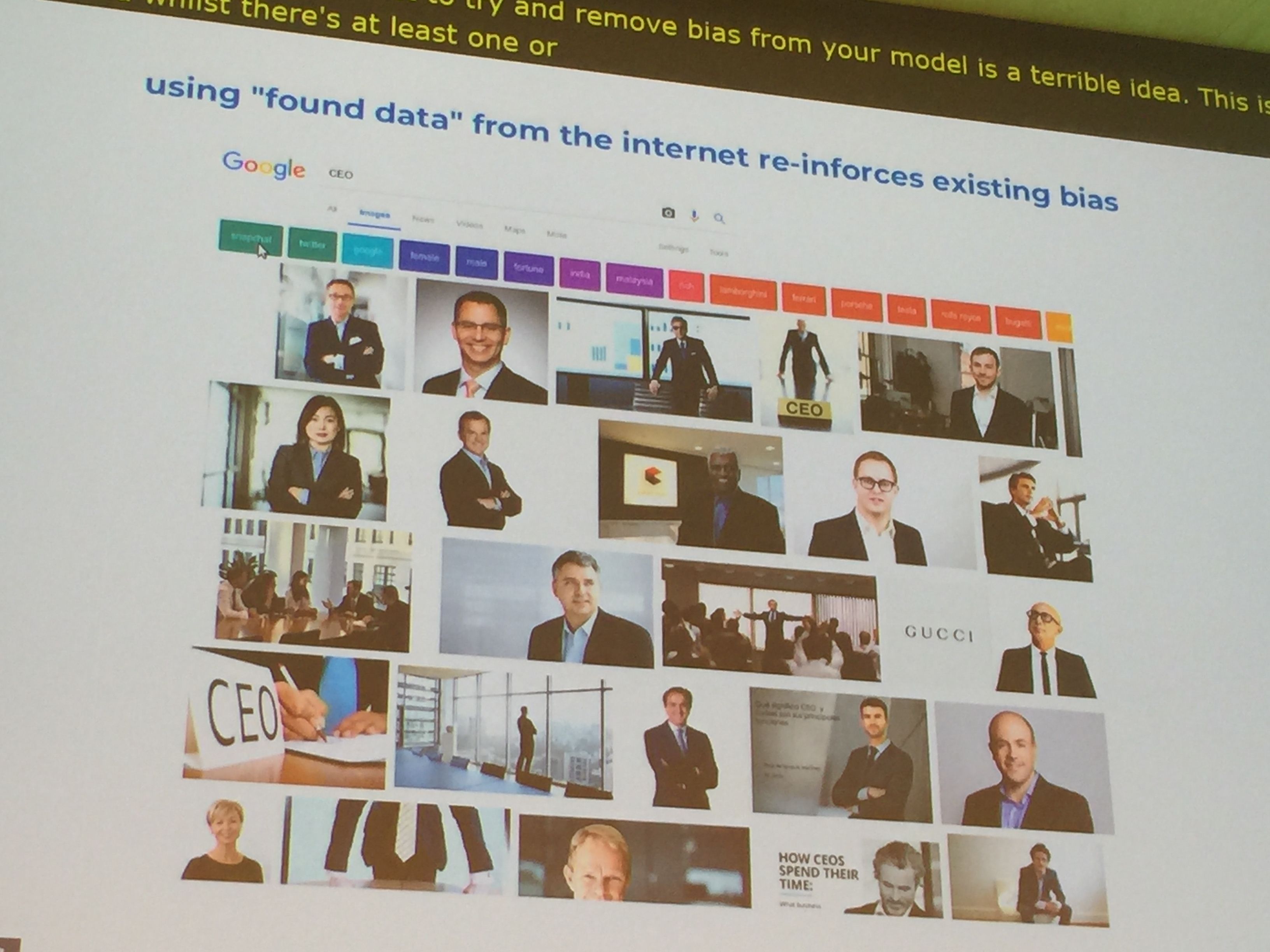

Smith highlighted ways to address these biases and pointed out pitfalls that can arise. One proposed solution was to increase the amount of data used to train models. Quadrupling the amount of data has been shown to double the effectiveness of an algorithm. However, Smith cautioned against training models with data publicly available on the Internet, as it can reinforce existing biases. This is especially dangerous because it can take years before a bias that is packaged into an algorithm is detected or corrected.

Regulation was another solution mentioned during the presentation. In Europe at least, good legislation that protects people from discrimination based on their identity and other protected characteristics is already in place. In addition, GDPR offers algorithmic accountability and protection, and requires algorithms to be created in a transparent and verifiable manner in order to guard against algorithmic decisions that negatively impact someone’s life, freedom, legal status, or livelihood.

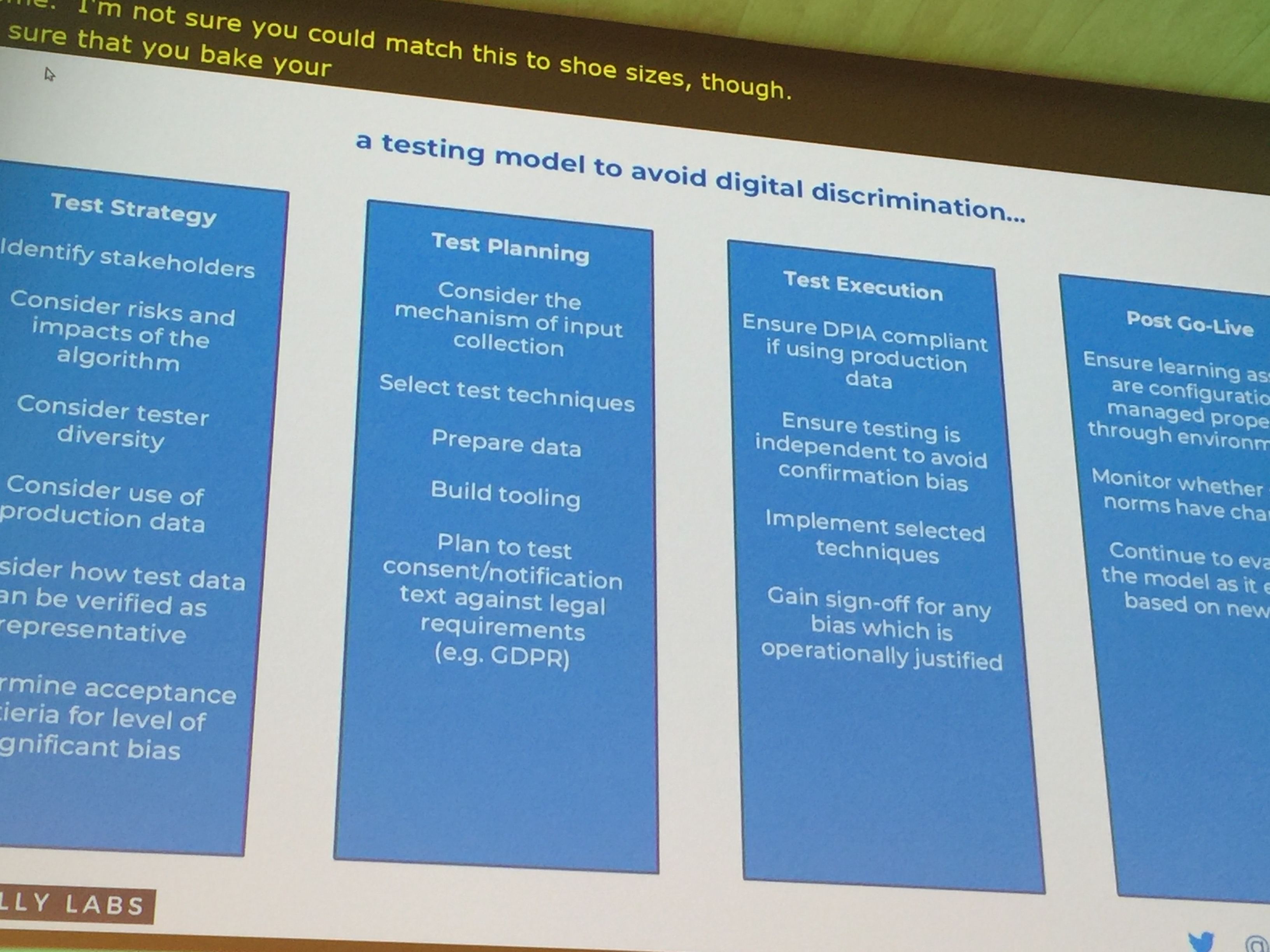

A testing model to avoid digital discrimination

The talk closed by providing a testing model to avoid digital discrimination. An important first step that needs to be taken when designing an algorithmic system is to identify the stakeholders of the system.

It is also important to consider the risk and impact of all the algorithms we build. Smith argued that most of the algorithms we build are low risk and low impact. However, we still need to be vigilant. The misuse of algorithms, for example in political advertising, can have dire consequences for how democracies function.

To make algorithms more robust and mitigate potential bias, Smith also argued for diverse teams, which can provide a wider perspective than a homogeneous group. Finally, once an algorithm goes live, it’s important to constantly re-evaluate and adapt it to changing social norms, new data, and unanticipated experiences.
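As one possible way to picture this ongoing re-evaluation, the Python sketch below compares error rates across groups on recent predictions and flags groups that are doing noticeably worse than the best one. This is my own assumption about what such a check could look like, not a method from the talk: the function names, the record format, the sample data, and the `max_gap` threshold are hypothetical.

```python
def error_rate_by_group(records):
    """Compute the fraction of wrong predictions for each group.

    records is an iterable of (group, predicted, actual) tuples.
    """
    totals = {}
    errors = {}
    for group, predicted, actual in records:
        totals[group] = totals.get(group, 0) + 1
        if predicted != actual:
            errors[group] = errors.get(group, 0) + 1
    return {group: errors.get(group, 0) / totals[group] for group in totals}


def groups_needing_review(records, max_gap):
    """Return groups whose error rate exceeds the best group's by more than max_gap."""
    rates = error_rate_by_group(records)
    if not rates:
        return []
    best = min(rates.values())
    return sorted(group for group, rate in rates.items() if rate - best > max_gap)


if __name__ == "__main__":
    # Made-up recent predictions: (group, predicted label, actual label).
    recent = (
        [("A", "open", "open")] * 9 + [("A", "closed", "open")] * 1
        + [("B", "open", "open")] * 6 + [("B", "closed", "open")] * 4
    )
    print(error_rate_by_group(recent))
    print(groups_needing_review(recent, max_gap=0.2))
```

Running a check like this on fresh data at regular intervals is one way to notice when a live system starts treating a group worse than it did at launch.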

Closing Thoughts

I want to thank the organizers of the Full Stack Fest in Barcelona for the opportunity to attend this wonderful conference. I also want to thank the Rails Girls Summer of Code organizers for encouraging us to attend a conference as part of our summer training. Finally, I want to thank Klaus Fleerkötter, Anemari Fiser, and Mónica Calderaro for including me in the Thoughtworks community at the conference. This made the experience less daunting and incredibly enriching.

Keep in contact with Amalia